Flow Matching and Diffusion Models

July 28, 2025

I am transcribing MIT 6.S184: Flow Matching and Diffusion Models – Lecture – Generative AI with SDEs. I try to redraw the diagrams in TikZ too.

tl;dr

- Ordinary Differential Equations have to be studied separately.

- Stochastic Differential Equations are a separate subject.

- I believe that I need to solve the exercises by coding to understand everything reasonably well.

Lecture 1

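Conditional generation means sampling from the conditional data distribution, $z \sim p_{\text{data}}(\cdot \mid y)$.

Generative models generate samples from the data distribution, $z \sim p_{\text{data}}$.

Initial Distribution : $p_{\text{init}}$

Default is $p_{\text{init}} = \mathcal{N}(0, I_d)$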

Flow Model

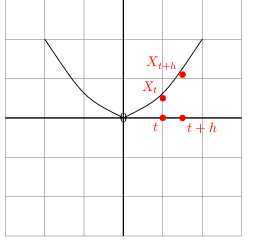

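Trajectory $X : [0,1] \to \mathbb{R}^d,\ t \mapsto X_t$. So for each time $t$ we get a vector out.

Vector Field $u : \mathbb{R}^d \times [0,1] \to \mathbb{R}^d,\ (x, t) \mapsto u_t(x)$. (There is a space component and a time component.)

Flow $\psi : \mathbb{R}^d \times [0,1] \to \mathbb{R}^d,\ (x_0, t) \mapsto \psi_t(x_0)$

means that for every initial condition $x_0$ I want this to be a solution to my ODE.

$\psi_0(x_0) = x_0$, which is the initial condition.

The time derivative of $\psi_t(x_0)$ is $\frac{d}{dt}\psi_t(x_0) = u_t(\psi_t(x_0))$.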

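Neural Network $u_t^\theta$ (the vector field, with parameters $\theta$).

Random Initialization $X_0 \sim p_{\text{init}}$

Ordinary Differential Equation (Time Derivative) $\frac{d}{dt} X_t = u_t^\theta(X_t)$

Goal: Simulate to get $X_1 \sim p_{\text{data}}$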

This means that a flow is a collection of trajectories, one for each initial condition, that all solve the ODE.
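To make the idea of a flow concrete, here is a minimal sketch. It assumes a toy vector field $u_t(x) = -x$ (my own choice for illustration, not from the lecture), whose exact flow is $\psi_t(x_0) = x_0 e^{-t}$, and follows a few initial conditions with small Euler steps.

```python
import math

def vector_field(x, t):
    # Toy vector field chosen for illustration (an assumption): u_t(x) = -x.
    return -x

def simulate_ode(x0, n_steps=1000):
    """Follow one trajectory on [0, 1] with Euler steps X_{t+h} = X_t + h * u_t(X_t)."""
    h = 1.0 / n_steps
    x = x0
    trajectory = [x]
    for i in range(n_steps):
        t = i * h
        x = x + h * vector_field(x, t)
        trajectory.append(x)
    return trajectory

# A flow is one trajectory per initial condition.
initial_conditions = [-2.0, 0.5, 3.0]
flow_at_1 = [simulate_ode(x0)[-1] for x0 in initial_conditions]
exact_at_1 = [x0 * math.exp(-1.0) for x0 in initial_conditions]

for x0, approx, exact in zip(initial_conditions, flow_at_1, exact_at_1):
    print(f"x0={x0:+.1f}  simulated={approx:+.4f}  exact={exact:+.4f}")
```

Every starting point gets its own trajectory, and the simulated endpoints should sit close to the closed-form flow.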

Diffusion Model

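Stochastic Process $(X_t)_{0 \le t \le 1}$.

$X_t$ is a random variable for every $t$.

Vector Field $u_t$ + Diffusion Coefficient $\sigma_t$

Stochastic Differential Equation

$$dX_t = u_t(X_t)\,dt + \sigma_t\,dW_t$$

This means that the change of $X_t$ in time is given by the direction of the vector field plus noise from a Brownian motion $W_t$ scaled by $\sigma_t$.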

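Brownian Motion $W = (W_t)_{t \ge 0}$

It is a stochastic process, and in this case the time can be infinite. We don’t have to stop at $t = 1$.

- It has Gaussian increments. What does it mean? $W_s - W_t \sim \mathcal{N}(0, (s - t) I_d)$ for all $0 \le t < s$.

These are two arbitrary time points and t is before s, and the variance of the Gaussian distribution grows linearly with the elapsed time $s - t$.

- Independent increments. This means that for any times $0 \le t_0 < t_1 < \dots < t_n$ the increments $W_{t_1} - W_{t_0}, \dots, W_{t_n} - W_{t_{n-1}}$ are independent random variables.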
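The two properties can be checked numerically. This is a minimal one-dimensional sketch, assuming the usual convention $W_0 = 0$ and building each path from independent Gaussian increments of variance $h$; the number of paths and steps are arbitrary choices of mine.

```python
import math
import random

def brownian_path(n_steps, horizon, rng):
    """Build W on [0, horizon] from independent increments ~ N(0, h)."""
    h = horizon / n_steps
    w = 0.0  # usual convention: W_0 = 0
    path = [w]
    for _ in range(n_steps):
        w += math.sqrt(h) * rng.gauss(0.0, 1.0)
        path.append(w)
    return path

def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

rng = random.Random(0)
paths = [brownian_path(100, 1.0, rng) for _ in range(5000)]

# The variance of W_t should grow linearly with t.
var_half = variance([p[50] for p in paths])   # W at t = 0.5
var_one = variance([p[100] for p in paths])   # W at t = 1.0
print(f"Var[W_0.5] ~ {var_half:.3f}, Var[W_1] ~ {var_one:.3f}")
```

The empirical variance at $t = 1$ should come out roughly twice the variance at $t = 0.5$.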

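So at this stage, in order to understand the following, I need a book or another course on ODEs.

$$\frac{d}{dt} X_t = u_t(X_t) \iff X_{t+h} = X_t + h\,u_t(X_t) + h\,R_t(h)$$

This means that a trajectory satisfying the ODE is equivalent to saying that the state at time $t + h$ is the state $X_t$ at the current time

plus h times the direction of the vector field, $u_t(X_t)$,

plus a remainder term $h\,R_t(h)$ that can be ignored, because $R_t(h) \to 0$ as $h \to 0$.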

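How are derivatives defined ?

$$\frac{d}{dt} X_t = \lim_{h \to 0} \frac{X_{t+h} - X_t}{h}$$

This is the basic definition that I have to understand by learning Calculus.

Derivative of a trajectory

$$\frac{X_{t+h} - X_t}{h} = u_t(X_t) + R_t(h), \qquad \lim_{h \to 0} R_t(h) = 0$$

And by simple algebra (multiply both sides by $h$ and add $X_t$) we get the form of the ODE shown above (this section).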
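A small numerical check of the claim that the remainder can be ignored. The sketch assumes the toy ODE $\frac{d}{dt}X_t = -X_t$ (my choice, not from the lecture), for which the exact next state is $X_{t+h} = X_t e^{-h}$, and measures what is left over after one Euler step.

```python
import math

x_t = 2.0  # current state, an arbitrary value for illustration

def remainder(h):
    """R(h) = (X_{t+h} - X_t - h * u(X_t)) / h for the toy field u(x) = -x."""
    exact_next = x_t * math.exp(-h)   # true solution after time h
    euler_next = x_t + h * (-x_t)     # X_t + h * u(X_t)
    return (exact_next - euler_next) / h

step_sizes = [0.1, 0.01, 0.001]
remainders = [remainder(h) for h in step_sizes]

for h, r in zip(step_sizes, remainders):
    print(f"h={h:<6} remainder={r:.6f}")
```

The remainder shrinks roughly in proportion to $h$, which is why it vanishes in the limit.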

Ordinary Differential Equation to Stochastic Differential Equation

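This is the recap. We found a formulation of the ODE that doesn’t depend on derivatives and is specified up to an error term. The SDE adds a Brownian increment to it:

$$X_{t+h} = X_t + h\,u_t(X_t) + \sigma_t\,(W_{t+h} - W_t) + h\,R_t(h)$$

$\sigma_t$ is the diffusion coefficient used to scale the Brownian Motion. If $\sigma_t$ is zero, it is equivalent to the original ODE.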
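Dropping the remainder term gives a simulation scheme usually called Euler–Maruyama. The sketch below assumes a toy drift $u_t(x) = -x$ and a constant diffusion coefficient (both my choices for illustration). For this toy SDE started at $X_0 = 0$ with $\sigma = 1$, the variance at $t = 1$ is known in closed form, $(1 - e^{-2})/2$, so the simulation can be checked against it.

```python
import math
import random

def euler_maruyama(x0, sigma, n_steps, rng):
    """Simulate dX = -X dt + sigma dW on [0, 1] (toy drift, an assumption)."""
    h = 1.0 / n_steps
    x = x0
    for _ in range(n_steps):
        drift = -x
        brownian_increment = math.sqrt(h) * rng.gauss(0.0, 1.0)  # W_{t+h} - W_t
        x = x + h * drift + sigma * brownian_increment
    return x

rng = random.Random(0)

# With noise: compare the empirical variance at t = 1 to the closed form.
samples = [euler_maruyama(0.0, 1.0, 100, rng) for _ in range(5000)]
mean = sum(samples) / len(samples)
empirical_var = sum((s - mean) ** 2 for s in samples) / len(samples)
exact_var = (1.0 - math.exp(-2.0)) / 2.0

# Without noise (sigma = 0) the scheme reduces to the Euler method for the ODE.
ode_endpoint = euler_maruyama(1.0, 0.0, 100, rng)

print(f"variance: simulated={empirical_var:.3f} exact={exact_var:.3f}")
print(f"sigma=0 endpoint={ode_endpoint:.4f} vs exp(-1)={math.exp(-1.0):.4f}")
```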

Why do we need Brownian Motion ?

I didn’t really follow this. But the answer given was this: Brownian Motion plays the same universal role among stochastic processes that the Gaussian distribution plays among probability distributions.

Lecture 2

Reminder of what was covered in Lecture 1

Deriving a Training Target

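Typically, we train the model by minimizing a mean-squared error between the network output and a training target:

$$L(\theta) = \left\| u_t^\theta(x) - u_t^{\text{target}}(x) \right\|^2$$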

In regression or classification, the training target is the label. Here we have no label. We have to derive a training target.

The professor states that you don’t have to understand all the derivations. I anticipate some mathematics I haven’t studied earlier.

The key terms to remember are the following. We have to make sure we understand the formula for each of them.

Conditional and Marginal Probability Path

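Conditional probability path $p_t(\cdot \mid z)$, with $p_0(\cdot \mid z) = p_{\text{init}}$ and $p_1(\cdot \mid z) = \delta_z$.

I don’t fully understand this Dirac distribution $\delta_z$ as of now. But it seems that sampling from it always returns the same point $z$.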

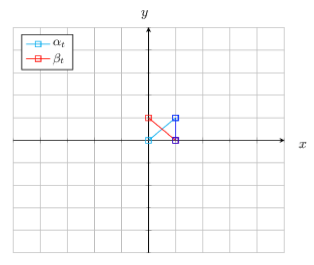

Example : Gaussian Probability Path

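$$p_t(\cdot \mid z) = \mathcal{N}(\alpha_t z,\ \beta_t^2 I_d), \qquad \alpha_0 = \beta_1 = 0,\quad \alpha_1 = \beta_0 = 1$$

The diagram is small. But the idea is that when Time is 0, the mean is 0 and the variance is 1, which is $p_{\text{init}} = \mathcal{N}(0, I_d)$, and when Time is 1, the mean is $z$ and the variance is 0.

The distribution with variance 0 is the Dirac $\delta_z$.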
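Sampling from the Gaussian path is simple: draw standard noise, scale it and shift it. The sketch below is one-dimensional and assumes the linear schedules $\alpha_t = t$ and $\beta_t = 1 - t$ (one common choice that satisfies the boundary conditions; the lecture does not fix them here) and a hypothetical data point $z = 3$.

```python
import random

def alpha(t):
    return t          # assumed schedule: alpha_0 = 0, alpha_1 = 1

def beta(t):
    return 1.0 - t    # assumed schedule: beta_0 = 1, beta_1 = 0

def sample_conditional_path(z, t, rng):
    """Draw x ~ N(alpha_t * z, beta_t^2) as alpha_t * z + beta_t * eps."""
    eps = rng.gauss(0.0, 1.0)
    return alpha(t) * z + beta(t) * eps

def mean_and_variance(values):
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var

rng = random.Random(0)
z = 3.0  # hypothetical data point
stats = {}
for t in (0.0, 0.5, 1.0):
    samples = [sample_conditional_path(z, t, rng) for _ in range(20000)]
    stats[t] = mean_and_variance(samples)
    print(f"t={t}: mean={stats[t][0]:.3f} variance={stats[t][1]:.3f}")
```

At time 0 the samples look like standard noise, and at time 1 every sample equals $z$, which is the Dirac behaviour.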

Example : Marginal Probability Path

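Well. This is not clear at this stage. But we sample one data point $z$ from $p_{\text{data}}$, then sample $x$ from the conditional path $p_t(\cdot \mid z)$, and marginal probability path means that we forget $z$.

$$z \sim p_{\text{data}},\quad x \sim p_t(\cdot \mid z) \ \Rightarrow\ x \sim p_t$$

As far as I understand, the data distribution and the conditional distribution together lead to the marginal path.

The density formula is not clear at this stage.

$$p_t(x) = \int p_t(x \mid z)\, p_{\text{data}}(z)\, dz$$

All of this seems to describe that we move from noise to the distribution of the data we are dealing with: $p_0 = p_{\text{init}}$ and $p_1 = p_{\text{data}}$.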
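The "sample a data point, then forget it" recipe can be written in a few lines. This is a minimal sketch assuming a toy data distribution with only two points, $-2$ and $2$, and a Gaussian conditional path with $\alpha_t = t$, $\beta_t = 1 - t$ (all of these are my assumptions for illustration).

```python
import random

DATA_POINTS = [-2.0, 2.0]  # toy p_data: uniform over two points (an assumption)

def sample_marginal_path(t, rng):
    """Sample x ~ p_t: draw z ~ p_data, draw x ~ p_t(.|z), then forget z."""
    z = rng.choice(DATA_POINTS)
    eps = rng.gauss(0.0, 1.0)
    x = t * z + (1.0 - t) * eps   # Gaussian path with alpha_t = t, beta_t = 1 - t
    return x                      # z is not returned: that is the marginalization

rng = random.Random(0)
samples_t0 = [sample_marginal_path(0.0, rng) for _ in range(10000)]
samples_t1 = [sample_marginal_path(1.0, rng) for _ in range(10000)]

mean_t0 = sum(samples_t0) / len(samples_t0)
var_t0 = sum((s - mean_t0) ** 2 for s in samples_t0) / len(samples_t0)

print(f"t=0: mean={mean_t0:.3f} variance={var_t0:.3f} (noise)")
print(f"t=1: distinct values={sorted(set(samples_t1))} (data)")
```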

Conditional and Marginal Vector Field

Conditional Vector field

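We want the conditional vector field $u_t^{\text{target}}(x \mid z)$ to be such that, starting from a random initial point

$$X_0 \sim p_{\text{init}}$$

and simulating the ODE like this,

$$\frac{d}{dt} X_t = u_t^{\text{target}}(X_t \mid z)$$

the distribution of $X_t$ at every time point is given by this probability path:

$$X_t \sim p_t(\cdot \mid z) \quad (0 \le t \le 1)$$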

Example : Conditional Gaussian Vector field

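$$u_t^{\text{target}}(x \mid z) = \left(\dot{\alpha}_t - \frac{\dot{\beta}_t}{\beta_t}\,\alpha_t\right) z + \frac{\dot{\beta}_t}{\beta_t}\, x$$

Here $\alpha_t z$ is the mean and $\beta_t$ the standard deviation of the Gaussian path. $\dot{\alpha}_t = \frac{d}{dt}\alpha_t$ is the time derivative, and the dot notation is from Physics.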
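To check that this vector field really follows the Gaussian path, the sketch below simulates the conditional ODE in one dimension with Euler steps. It assumes the standard closed form $u_t(x \mid z) = (\dot{\alpha}_t - \frac{\dot{\beta}_t}{\beta_t}\alpha_t) z + \frac{\dot{\beta}_t}{\beta_t} x$, the schedules $\alpha_t = t$, $\beta_t = 1 - t$, and a hypothetical data point $z = 2$; the schedules and the data point are my choices.

```python
import random

def alpha(t): return t
def beta(t): return 1.0 - t
def alpha_dot(t): return 1.0    # time derivative of alpha_t
def beta_dot(t): return -1.0    # time derivative of beta_t

def conditional_vector_field(x, z, t):
    ratio = beta_dot(t) / beta(t)
    return (alpha_dot(t) - ratio * alpha(t)) * z + ratio * x

def simulate(x0, z, n_steps=100):
    """Euler simulation on [0, 1]; returns the state at t = 0.5 and at t = 1."""
    h = 1.0 / n_steps
    x = x0
    halfway = None
    for i in range(n_steps):
        t = i * h                 # last evaluation is at t = 1 - h, so beta_t > 0
        x = x + h * conditional_vector_field(x, z, t)
        if i + 1 == n_steps // 2:
            halfway = x
    return halfway, x

rng = random.Random(0)
z = 2.0  # hypothetical data point
results = [simulate(rng.gauss(0.0, 1.0), z) for _ in range(5000)]
halfway_states = [r[0] for r in results]
end_states = [r[1] for r in results]

mean_half = sum(halfway_states) / len(halfway_states)
var_half = sum((s - mean_half) ** 2 for s in halfway_states) / len(halfway_states)
print(f"t=0.5: mean={mean_half:.3f} (expect {alpha(0.5) * z}), "
      f"variance={var_half:.3f} (expect {beta(0.5) ** 2})")
print(f"t=1: largest distance from z = {max(abs(s - z) for s in end_states):.2e}")
```

Starting from noise, all trajectories collapse onto $z$ at time 1, and halfway through they are distributed like $\mathcal{N}(\alpha_t z, \beta_t^2)$.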

Marginalization trick

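The marginal vector field is

$$u_t^{\text{target}}(x) = \int u_t^{\text{target}}(x \mid z)\, \frac{p_t(x \mid z)\, p_{\text{data}}(z)}{p_t(x)}\, dz$$

Not very clear at this point, but the fraction is an application of Bayes' rule that gives the posterior distribution: what could have been the data point $z$ that gave rise to $x$?

If we start from $X_0 \sim p_{\text{init}}$ and follow $\frac{d}{dt} X_t = u_t^{\text{target}}(X_t)$, then the distribution of $X_t$ at every time point is given by the marginal path, $X_t \sim p_t$.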
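When the data distribution is a finite set of points, the integral becomes a weighted sum and the Bayes posterior becomes a softmax over the data points. The sketch below assumes a toy dataset $\{-2, 2\}$ with equal weights and a Gaussian conditional path with $\alpha_t = t$, $\beta_t = 1 - t$, for which the conditional vector field simplifies to $(z - x)/(1 - t)$. None of these choices come from the lecture.

```python
import math
import random

DATA_POINTS = [-2.0, 2.0]  # toy p_data with equal weights (an assumption)

def posterior_weights(x, t):
    """Bayes rule: weight of each z given x, proportional to p_t(x|z) * p_data(z)."""
    std = 1.0 - t
    logits = [-(x - t * z) ** 2 / (2.0 * std ** 2) for z in DATA_POINTS]
    largest = max(logits)                          # subtract max for stability
    unnormalized = [math.exp(l - largest) for l in logits]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

def marginal_vector_field(x, t):
    """Posterior-weighted average of the conditional fields (z - x) / (1 - t)."""
    weights = posterior_weights(x, t)
    return sum(w * (z - x) / (1.0 - t) for w, z in zip(weights, DATA_POINTS))

def simulate(x0, n_steps=200):
    h = 1.0 / n_steps
    x = x0
    for i in range(n_steps):
        x = x + h * marginal_vector_field(x, i * h)  # last step is at t = 1 - h
    return x

rng = random.Random(0)
endpoints = [simulate(rng.gauss(0.0, 1.0)) for _ in range(1000)]

near_data = sum(min(abs(x - z) for z in DATA_POINTS) < 0.05 for x in endpoints) / len(endpoints)
fraction_positive = sum(x > 0 for x in endpoints) / len(endpoints)
print(f"fraction ending near a data point: {near_data:.3f}")
print(f"fraction ending near +2: {fraction_positive:.3f}")
```

Noise samples are transported onto the two data points, about half to each, which is what following the marginal path from $p_{\text{init}}$ to $p_{\text{data}}$ should look like.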

Conditional and marginal Score function

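Conditional Score

$$\nabla \log p_t(x \mid z)$$

Marginal Score

$$\nabla \log p_t(x)$$

Derivation

Substituting the marginal density $p_t(x) = \int p_t(x \mid z)\, p_{\text{data}}(z)\, dz$, we get this after moving the gradient inside the integral.

$$\nabla \log p_t(x) = \frac{\nabla p_t(x)}{p_t(x)} = \frac{\nabla \int p_t(x \mid z)\, p_{\text{data}}(z)\, dz}{p_t(x)} = \frac{\int \nabla p_t(x \mid z)\, p_{\text{data}}(z)\, dz}{p_t(x)}$$

Using the result $\nabla p_t(x \mid z) = p_t(x \mid z)\, \nabla \log p_t(x \mid z)$

$$\nabla \log p_t(x) = \int \nabla \log p_t(x \mid z)\, \frac{p_t(x \mid z)\, p_{\text{data}}(z)}{p_t(x)}\, dz$$

What is the score of the Gaussian probability path $\mathcal{N}(\alpha_t z, \beta_t^2 I_d)$?

$$\nabla \log p_t(x \mid z) = -\frac{x - \alpha_t z}{\beta_t^2}$$

This derivation is beyond me at the moment, but the instructor mentioned this.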
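The Gaussian score can at least be verified numerically without following the derivation. The sketch below is one-dimensional, assumes $\alpha_t = t$ and $\beta_t = 1 - t$, and compares the closed form $-(x - \alpha_t z)/\beta_t^2$ with a finite-difference derivative of the log-density. The evaluation point is arbitrary.

```python
import math

def alpha(t): return t          # assumed schedule
def beta(t): return 1.0 - t     # assumed schedule

def log_density(x, z, t):
    """log of the N(alpha_t * z, beta_t^2) density at x."""
    mean, std = alpha(t) * z, beta(t)
    return -0.5 * math.log(2.0 * math.pi * std ** 2) - (x - mean) ** 2 / (2.0 * std ** 2)

def conditional_score(x, z, t):
    """Closed-form score: derivative of log p_t(x|z) with respect to x."""
    return -(x - alpha(t) * z) / beta(t) ** 2

x, z, t = 0.7, 2.0, 0.4   # arbitrary evaluation point
eps = 1e-5
numeric_score = (log_density(x + eps, z, t) - log_density(x - eps, z, t)) / (2.0 * eps)
exact_score = conditional_score(x, z, t)

print(f"finite difference: {numeric_score:.6f}")
print(f"closed form:       {exact_score:.6f}")
```

The score points from $x$ towards the mean $\alpha_t z$, and it gets stronger as $\beta_t$ shrinks.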

Theorem : SDE extension trick

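Let $u_t^{\text{target}}$ and $p_t$ be the marginal vector field and the marginal probability path. Then for any diffusion coefficient $\sigma_t \ge 0$

$$X_0 \sim p_{\text{init}},\quad dX_t = \left[u_t^{\text{target}}(X_t) + \frac{\sigma_t^2}{2}\,\nabla \log p_t(X_t)\right]dt + \sigma_t\,dW_t \ \Rightarrow\ X_t \sim p_t \quad (0 \le t \le 1)$$

$\sigma_t\,dW_t$ is the noise injected.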
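A small experiment makes the theorem more believable. The sketch below assumes the standard statement of the trick, where the drift is the vector field plus $\frac{\sigma_t^2}{2}$ times the score. To keep everything in closed form it assumes the data distribution is a single point $z = 2$, so the marginal path equals the Gaussian conditional path with $\alpha_t = t$, $\beta_t = 1 - t$. It simulates only up to $t = 0.5$, because the score grows without bound as $\beta_t \to 0$. All of these are my simplifications.

```python
import math
import random

Z = 2.0  # the single hypothetical data point

def vector_field(x, t):
    return (Z - x) / (1.0 - t)            # Gaussian path with alpha_t = t, beta_t = 1 - t

def score(x, t):
    return -(x - t * Z) / (1.0 - t) ** 2  # score of N(t * Z, (1 - t)^2)

def simulate_sde(sigma, t_end, n_steps, rng):
    """Euler-Maruyama for dX = [u + sigma^2 / 2 * score] dt + sigma dW."""
    h = t_end / n_steps
    x = rng.gauss(0.0, 1.0)               # X_0 ~ p_init = N(0, 1)
    for i in range(n_steps):
        t = i * h
        drift = vector_field(x, t) + 0.5 * sigma ** 2 * score(x, t)
        x = x + h * drift + sigma * math.sqrt(h) * rng.gauss(0.0, 1.0)
    return x

def mean_and_variance(values):
    mean = sum(values) / len(values)
    return mean, sum((v - mean) ** 2 for v in values) / len(values)

rng = random.Random(0)
stats = {}
for sigma in (0.0, 1.0):
    samples = [simulate_sde(sigma, 0.5, 100, rng) for _ in range(4000)]
    stats[sigma] = mean_and_variance(samples)
    print(f"sigma={sigma}: mean={stats[sigma][0]:.3f} variance={stats[sigma][1]:.3f}")
```

With and without injected noise, the samples at $t = 0.5$ should have mean close to $0.5 \cdot z = 1$ and variance close to $0.25$, matching the same path $p_t$.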