I came across this calculation while reading about recommender systems. Each row of the matrix is a movie profile. The first five columns record whether a particular actor appeared in the movie, and the last column is the rating a particular user gave it.

The first five attributes are Boolean, and the last is an integer "rating." Assume that the scale factor for the rating is α. Compute, as a function of α, the cosine distances between each pair of profiles. For each of α = 0, 0.5, 1, and 2, determine the cosine of the angle between each pair of vectors.
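The three profiles are the rows of the matrix `A` in the R code. With the rating scaled by α they are A = (1,0,1,0,1,2α), B = (1,1,0,0,1,6α) and C = (0,1,0,1,0,2α). Working out the dot products and squared norms by hand gives each cosine as a function of α:

$$
\begin{aligned}
A\cdot B &= 2 + 12\alpha^2, & \lVert A\rVert^2 &= 3 + 4\alpha^2,\\
B\cdot C &= 1 + 12\alpha^2, & \lVert B\rVert^2 &= 3 + 36\alpha^2,\\
A\cdot C &= 4\alpha^2, & \lVert C\rVert^2 &= 2 + 4\alpha^2,
\end{aligned}
$$

$$
\cos(A,B) = \frac{2 + 12\alpha^2}{\sqrt{(3+4\alpha^2)(3+36\alpha^2)}},\qquad
\cos(B,C) = \frac{1 + 12\alpha^2}{\sqrt{(3+36\alpha^2)(2+4\alpha^2)}},\qquad
\cos(A,C) = \frac{4\alpha^2}{\sqrt{(3+4\alpha^2)(2+4\alpha^2)}}.
$$

For example, at α = 1 these give 14/√273 ≈ 0.8473, 13/√234 ≈ 0.8498 and 4/√42 ≈ 0.6172. At α = 0, A and C share no actor, so their cosine is 0. As α grows, the rating term dominates and all three cosines approach 1.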

My R code to calculate is this.

# TODO: Add comment

#

# Author: radhakrishnan

###############################################################################

A = matrix(c(1,0,1,0,1,2,

1,1,0,0,1,6,

0,1,0,1,0,2),nrow=3,ncol=6,byrow=TRUE)

rownames(A) <- c("A","B","C")

scale1 <- A

scale1[,6] <- A[,6] * 0

print( paste( "A and B is ", ( sum(scale1[1,] * scale1[2,]) )/( sqrt( sum(scale1[1,]^2) ) * sqrt( sum(scale1[2,]^2) ) )) )

print( paste( "B and C is ", ( sum(scale1[2,] * scale1[3,]) )/( sqrt( sum(scale1[2,]^2) ) * sqrt( sum(scale1[3,]^2) ) )) )

print( paste( "A and C is ", ( sum(scale1[1,] * scale1[3,]) )/( sqrt( sum(scale1[1,]^2) ) * sqrt( sum(scale1[3,]^2) ) )) )

scale2 <- A

scale2[,6] <- A[,6] * 0.5

print( paste( "A and B is ", ( sum(scale2[1,] * scale2[2,]) )/( sqrt( sum(scale2[1,]^2) ) * sqrt( sum(scale2[2,]^2) ) )) )

print( paste( "B and C is ", ( sum(scale2[2,] * scale2[3,]) )/( sqrt( sum(scale2[2,]^2) ) * sqrt( sum(scale2[3,]^2) ) )) )

print( paste( "A and C is ", ( sum(scale2[1,] * scale2[3,]) )/( sqrt( sum(scale2[1,]^2) ) * sqrt( sum(scale2[3,]^2) ) )) )

scale3 <- A

scale3[,6] <- A[,6] * 1

print( paste( "A and B is ", ( sum(scale3[1,] * scale3[2,]) )/( sqrt( sum(scale3[1,]^2) ) * sqrt( sum(scale3[2,]^2) ) )) )

print( paste( "B and C is ", ( sum(scale3[2,] * scale3[3,]) )/( sqrt( sum(scale3[2,]^2) ) * sqrt( sum(scale3[3,]^2) ) )) )

print( paste( "A and C is ", ( sum(scale3[1,] * scale1[3,]) )/( sqrt( sum(scale3[1,]^2) ) * sqrt( sum(scale3[3,]^2) ) )) )

scale4 <- A

scale4[,6] <- A[,6] * 2

print( paste( "A and B is ", ( sum(scale4[1,] * scale4[2,]) )/( sqrt( sum(scale4[1,]^2) ) * sqrt( sum(scale4[2,]^2) ) )) )

print( paste( "B and C is ", ( sum(scale4[2,] * scale4[3,]) )/( sqrt( sum(scale4[2,]^2) ) * sqrt( sum(scale4[3,]^2) ) )) )

print( paste( "A and C is ", ( sum(scale4[1,] * scale4[3,]) )/( sqrt( sum(scale4[1,]^2) ) * sqrt( sum(scale4[3,]^2) ) )) )

> source(“/Users/radhakrishnan/Documents/eclipse/workspace/MMDS/cosinedistance.R”, echo=FALSE, encoding=”UTF-8″)

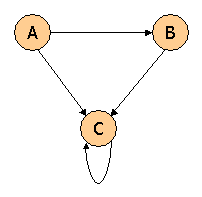

[1] “A and B is 0.666666666666667”

[1] “B and C is 0.408248290463863”

[1] “A and C is 0”

[1] “A and B is 0.721687836487032”

[1] “B and C is 0.666666666666667”

[1] “A and C is 0.288675134594813”

[1] “A and B is 0.847318545736323”

[1] “B and C is 0.849836585598797”

[1] “A and C is 0”

[1] “A and B is 0.946094540760746”

[1] “B and C is 0.95257934441568”

[1] “A and C is 0.8651809126974”
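The four copy-pasted blocks can also be collapsed into a loop over α. The following is a minimal sketch in base R. The helper `cosine_sim` and the names `profiles`, `pair_list`, `alphas` and `results` are made up for illustration.

```r
# Hypothetical helper: cosine of the angle between two numeric vectors
cosine_sim <- function(x, y) sum(x * y) / sqrt(sum(x^2) * sum(y^2))

# Profiles: columns 1-5 are Boolean actor indicators, column 6 is the rating
profiles <- matrix(c(1, 0, 1, 0, 1, 2,
                     1, 1, 0, 0, 1, 6,
                     0, 1, 0, 1, 0, 2),
                   nrow = 3, byrow = TRUE,
                   dimnames = list(c("A", "B", "C"), NULL))

alphas <- c(0, 0.5, 1, 2)
pair_list <- list(c("A", "B"), c("B", "C"), c("A", "C"))

# sapply over alphas gives one column per alpha; t() makes it one row per alpha
results <- t(sapply(alphas, function(alpha) {
  scaled <- profiles
  scaled[, 6] <- profiles[, 6] * alpha   # scale only the rating column
  sapply(pair_list, function(p) cosine_sim(scaled[p[1], ], scaled[p[2], ]))
}))
dimnames(results) <- list(paste0("alpha=", alphas),
                          sapply(pair_list, paste, collapse = "-"))

print(round(results, 4))
```

Each row of `results` corresponds to one α and each column to one pair, so `results["alpha=1", "A-C"]` is 4/√42 ≈ 0.6172. Trying another scale factor only means extending `alphas`.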