We use a scheduler/file transfer tool from a smaller vendor to avoid costs and huge upfront investments. This financial project has very strict SLAs. Would a more expensive solution help?

There are many open-source tools that can do the same thing. We used WebSphere Portal, which works, but I have also seen an open-source stack, built on open-source WSRP and similar components, that can be used to build portals.

Actually there is a more fundamental problem: the company’s technology culture. If the culture is not conducive to technical software development or testing, then neither good quality nor scalability can be ensured.

Basically I think that delivering good SLAs means clusters, performance testing, HA and so on, not an expensive tool. Even a very expensive tool needs strong testing teams and a test environment that matches the production environment. So when management does not understand fundamental testing principles, we are going to be in trouble.
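
As a rough illustration of why clusters matter for an SLA, the arithmetic can be sketched in Java. The class and method names are made up for this example, the 99.9% and 99% figures are illustrative rather than taken from our system, and the cluster formula assumes node failures are independent, which real clusters only approximate.

```java
import java.time.Duration;

/** Back-of-the-envelope availability arithmetic; figures are illustrative, not measured. */
final class AvailabilityMath {

    /** Downtime permitted over a period by an availability target such as 0.999. */
    static Duration allowedDowntime(double availability, Duration period) {
        if (availability < 0.0 || availability > 1.0) {
            throw new IllegalArgumentException("availability must be between 0 and 1");
        }
        long millis = Math.round((1.0 - availability) * period.toMillis());
        return Duration.ofMillis(millis);
    }

    /**
     * Availability of n redundant nodes, ASSUMING failures are independent
     * and any single surviving node can carry the load.
     */
    static double clusterAvailability(double nodeAvailability, int nodes) {
        if (nodes < 1) {
            throw new IllegalArgumentException("need at least one node");
        }
        return 1.0 - Math.pow(1.0 - nodeAvailability, nodes);
    }

    public static void main(String[] args) {
        Duration year = Duration.ofDays(365);
        System.out.println("99.9% over a year allows about "
                + allowedDowntime(0.999, year).toMinutes() + " minutes of downtime");
        System.out.println("Two 99% nodes together: " + clusterAvailability(0.99, 2));
    }
}
```

The point of the sketch is that the second node buys far more availability than a pricier single box would, but only if failover is actually tested in an environment that behaves like production.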

Recently we faced one of the many problems that are stopping us from delivering our software. The scheduler that we use is from Flux. Due to a browser upgrade issue, the web console of this tool would not open on our production servers, and monitoring became harder.

Red tape ensured that this situation could not be overcome easily. Usually tools have facilities to manipulate the runtime through backend code.

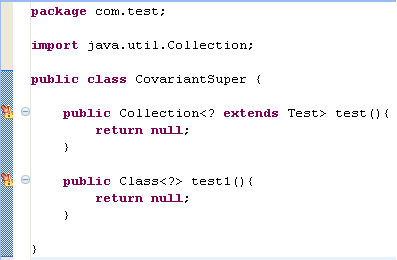

WebSphere Portal has XMLAccess. The Flux engines forming a cluster can be accessed using the following Java code. This code was able to recover a failed flow chart and saved the day.

I feel that if the people we are working with are not good, even the best and most expensive tools in the world cannot help us deliver good software.

So in the end it was working code that fixed the problem.

    package com.test;

    import java.rmi.NotBoundException;
    import java.rmi.RemoteException;

    import flux.Cluster;
    import flux.Engine;
    import flux.EngineException;
    import flux.EngineInfo;
    import flux.Factory;
    import flux.FlowChart;
    import flux.FlowChartIterator;

    public class LookupEngine {

        // Usage: LookupEngine <host> <namespace> <bindName>
        public static void main(String... argv)
                throws IllegalArgumentException, RemoteException, NotBoundException, EngineException {
            // Asserts are disabled by default, and argv[n] would fail first anyway,
            // so check the argument count explicitly.
            if (argv.length < 3) {
                throw new IllegalArgumentException("Usage: LookupEngine <host> <namespace> <bindName>");
            }
            Factory factory = Factory.makeInstance();
            new LookupEngine().lookupCluster(factory, argv[0], argv[1], argv[2]);
        }

        // Note: namespace is not used by the lookup itself.
        private void lookupCluster(Factory factory, String host, String namespace, String bindName)
                throws IllegalArgumentException, NotBoundException, EngineException, RemoteException {
            // 1099 is the default RMI registry port; "user" and "password" are placeholders.
            Cluster cluster = factory.lookupCluster(host, 1099, bindName, "user", "password");

            // Print every engine in the cluster and keep a reference to the last one listed.
            Engine engine = null;
            for (Object info : cluster.getEngines()) {
                System.out.println(info);
                engine = ((EngineInfo) info).createEngineReference();
            }
            if (engine == null) {
                System.out.println("No engines found in the cluster.");
                return;
            }

            // Print the name of every flow chart known to that engine.
            FlowChartIterator iterator = engine.get();
            try {
                while (iterator.hasNext()) {
                    System.out.println(((FlowChart) iterator.next()).getName());
                }
            } finally {
                iterator.close();
            }

            // Once we find the name of the flow chart we can recover it.
            // engine.recover("/NameSpace/FlowChart");
        }
    }
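
The listing above hardcodes the port and the credentials. One way to avoid that, sketched here without touching the Flux API at all, is to collect the connection details first and fail early if something is missing. Everything in this block is hypothetical: the `LookupSettings` class, the optional fourth argument for the port, and the `FLUX_USER` and `FLUX_PASSWORD` environment variable names are my own inventions for illustration, not something Flux defines.

```java
import java.util.Map;

/** Hypothetical holder for connection details; not part of the Flux API. */
final class LookupSettings {
    final String host;
    final String namespace;
    final String bindName;
    final int port;
    final String user;
    final String password;

    private LookupSettings(String host, String namespace, String bindName,
                           int port, String user, String password) {
        this.host = host;
        this.namespace = namespace;
        this.bindName = bindName;
        this.port = port;
        this.user = user;
        this.password = password;
    }

    /** Builds settings from command-line arguments and an environment map. */
    static LookupSettings from(String[] args, Map<String, String> env) {
        if (args.length < 3) {
            throw new IllegalArgumentException("usage: <host> <namespace> <bindName> [port]");
        }
        // Fall back to 1099, the default RMI registry port, when no port is given.
        int port = args.length > 3 ? Integer.parseInt(args[3]) : 1099;
        return new LookupSettings(args[0], args[1], args[2], port,
                require(env, "FLUX_USER"), require(env, "FLUX_PASSWORD"));
    }

    /** Fails early, with a clear message, when a variable is missing or empty. */
    private static String require(Map<String, String> env, String key) {
        String value = env.get(key);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("missing environment variable " + key);
        }
        return value;
    }

    public static void main(String[] args) {
        LookupSettings s = from(args, System.getenv());
        // Never print the password; host, port and user are enough for a sanity check.
        System.out.println("Would connect to " + s.host + ":" + s.port + " as " + s.user);
    }
}
```

The fields of such an object could then be passed to `factory.lookupCluster(...)` in place of the literals, so the same jar can be pointed at test and production without a rebuild.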

I have not posted anything for a long time because I moved to Chennai and started working for the payment card industry. Now I am working on a merchant acquiring system. This type of industry has strict SLAs and a high level of security governed by PCI DSS and many other specifications.

Creating a virtual machine and running OpenSolaris 10 on Windows XP is really cool even though I have seen a VMWare installation in 1999. Two things that piqued my interest are